Existential Redundancy is what happens when the world keeps running smoothly, and you slowly realize it no longer needs you to keep the lights on. It isn’t unemployment; it’s obsolescence with benefits. Machines cook your meals, manage your passwords, drive your car, curate your entertainment, and tuck you into nine hours of perfect algorithmic sleep. Your life becomes a spa run by robots: efficient, serene, and quietly humiliating. Comfort increases. Consequence disappears. You are no longer relied upon, consulted, or required; you are only serviced. Meaning thins because it has always depended on friction: being useful to someone, being necessary somewhere, being the weak link a system cannot afford to lose. Existential Redundancy names the soft panic that arrives when efficiency outruns belonging and you’re left staring at a world that works flawlessly without your fingerprints on anything.

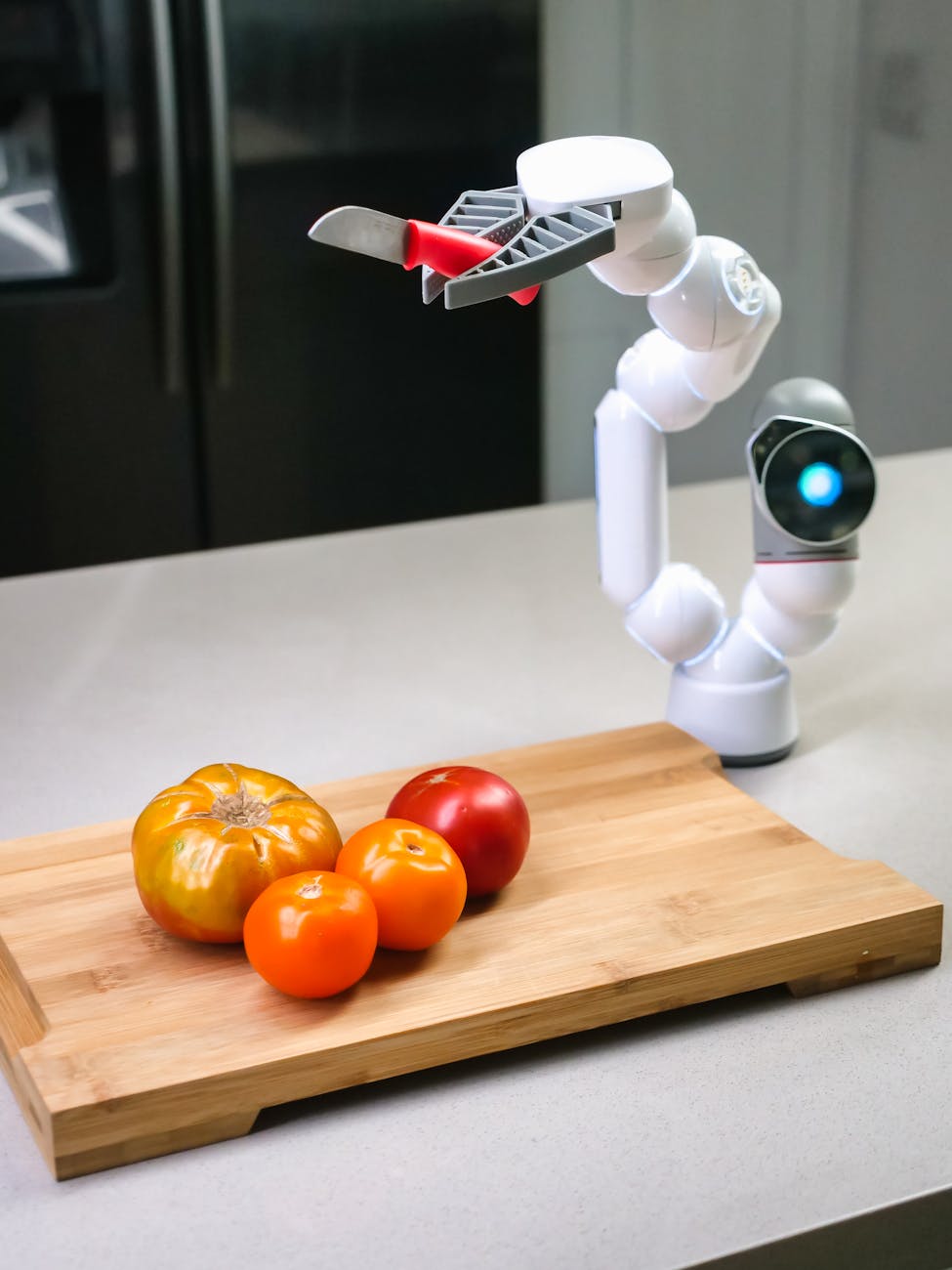

Picture the daily routine. A robot prepares pasta with basil hand-picked by a drone. Another cleans the dishes before you’ve even tasted dessert. An app shepherds you into perfect sleep. A driverless car ferries you through traffic like a padded cell on wheels. Screens bloom on every wall in the name of safety, insurance, and convenience, until privacy becomes a fond memory you half suspect you invented. You have time—oceans of it. But you are not a novelist or a painter or anyone whose passions demand heroic labor. You are intelligent, capable, modestly ambitious, and suddenly unnecessary. With every task outsourced and every risk eliminated, the old question—What do you do with your life?—mutates into something colder: Where do you belong in a system that no longer needs your hands, your judgment, or your effort?

So humanity does what it always does when it feels adrift: it forms support groups. Digital circles bloom overnight—forums, wellness pods, existential check-ins—places to talk about the hollow feeling of being perfectly cared for and utterly unnecessary. But even here, the machines step in. AI moderates the sessions. Bots curate the pain. Algorithms schedule the grief and optimize the empathy. Your confession is summarized before it lands. Your despair is tagged, categorized, and gently rerouted toward a premium subscription tier. Therapy becomes another frictionless service—efficient, soothing, and devastating in its implication. You sought human connection to escape redundancy, and found yourself processed by the very systems that made you redundant in the first place. In the end, even your loneliness is automated, and the final insult arrives wrapped in flawless customer service: Thank you for sharing. Your feelings have been successfully handled.