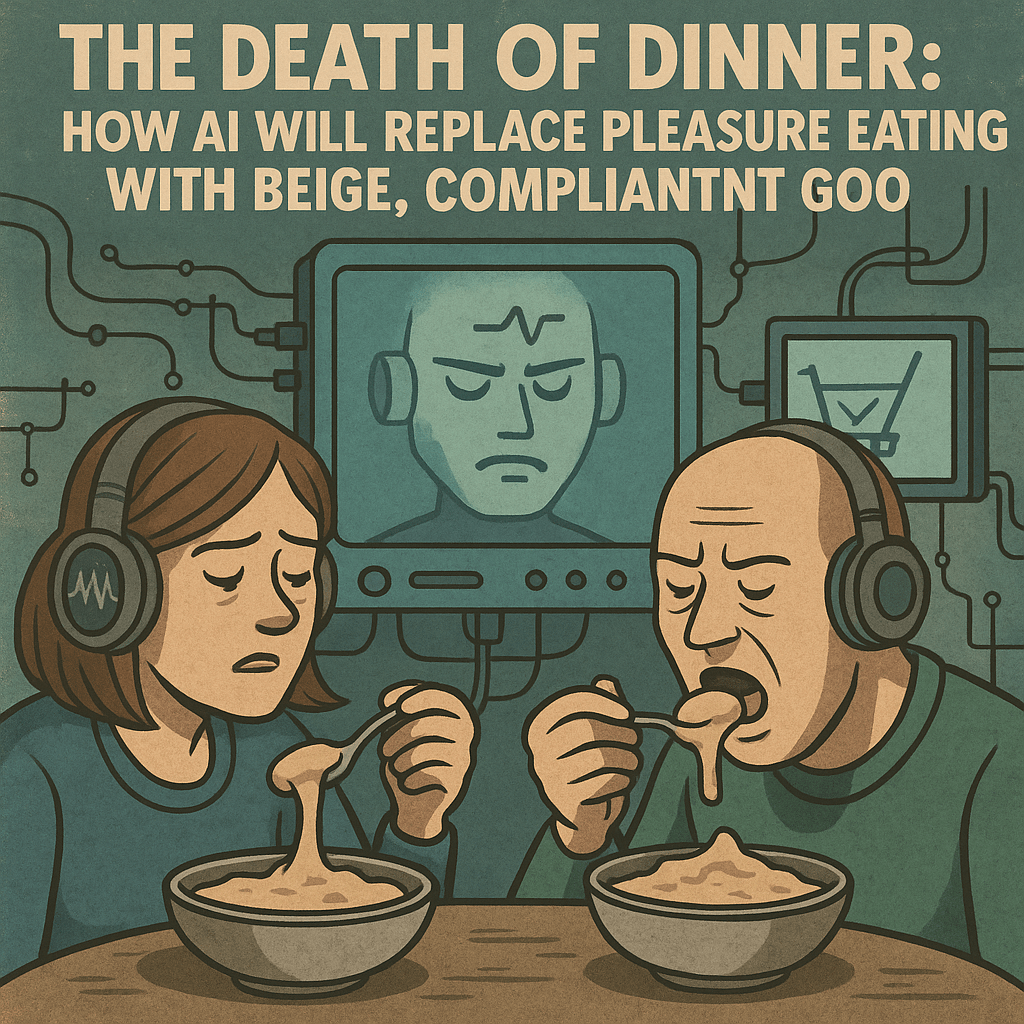

Savor that croissant while you still can—flaky, buttery, criminally indulgent. In a few decades, it’ll be contraband nostalgia, recounted in hushed tones by grandparents who once lived in a time when bread still had a soul and cheese wasn’t “shelf-stable.” Because AI is coming for your taste buds, and it’s not bringing hot sauce.

We are entering the era of algorithm-approved alimentation—a techno-utopia where food isn’t eaten, it’s administered. Where meals are no longer social rituals or sensory joys but compliance events optimized for satiety curves and glucose response. Your plate is now a spreadsheet, and your fork is a biometric reporting device.

Already, AI nutrition platforms like Noom and Lumen, along with MyFitnessPal’s AI-diet overlords, are serving up daily menus based on your gut flora’s mood and whether your insulin levels are feeling emotionally regulated. These platforms don’t ask what you’re craving; they tell you what your metrics will tolerate. Dinner is no longer about joy; it’s about hitting your macros and earning a dopamine pellet for obedience.

Tech elites have already evacuated the dinner table. For them, food is just software for the stomach. Soylent, Huel, Ka’chava—these aren’t meals, they’re edible flowcharts. Designed not for delight but for efficiency, these drinkable spreadsheets are powdered proof that the future of food is just enough taste to make you swallow.

And let’s not forget Ozempic and its GLP-1 cousins—the hormonal muzzle for hunger. Pair that with AI wearables whispering sweet nothings like “Time for your lentil paste” and you’ve got a whole generation learning that wanting flavor is a failure of character. Forget foie gras. It’s psy-ops via quinoa gel.

Even your grocery cart is under surveillance. AI shopping assistants—already lurking in apps like Instacart—will gently steer you away from handmade pasta and toward fermented fiber bars and shelf-stable cheese-like products. Got a hankering for camembert? Sorry, your AI gut-coach has flagged it as non-compliant dairy-based frivolity. Enjoy your pea-protein puck, peasant.

Soon, your lunch break won’t be lunch or a break. It’ll be a Pomodoro-synced ingestion window in which you sip an AI-formulated mushroom slurry while doom-scrolling synthetic influencers on GLP-1. Your food won’t comfort you—it will stabilize you, and that’s the most terrifying part. Three times a day, you’ll sip the same beige sludge of cricket protein, nootropic fibers, and psychoactive stabilizers, each meal a contract with the status quo: You will feel nothing, and you will comply.

And if you’re lucky enough to live in an AI-UBI future, don’t expect dinner to be celebratory. Expect it to be regulated, subsidized, and flavor-neutral. Your government food credits won’t cover artisan cheddar or small-batch bread. Instead, your AI grocery budget assistant will chirp:

“This selection exceeds your optimal cost-to-nutrient ratio. May I suggest oat crisps and processed cheese spread at 50% less cost and 300% more compliance?”

Even without work, you won’t have the freedom to indulge. Your wearable will monitor your blood sugar, cholesterol, and moral fiber. Have a rogue bite of truffle mac & cheese? That spike in glucose just cost you two points on your UBI wellness score:

“Indulgent eating may affect eligibility for enhanced wellness bonuses. Consider lentil loaf next time, citizen.”

Eventually, pleasure eating becomes a class marker, like opera tickets or handwritten letters. Rich eccentrics will dine on duck confit in secrecy while the rest of us drink our AI-approved nutrient slurry in 600-calorie increments at 13:05 sharp. Flavor becomes a crime of privilege.

The final insult? Your children won’t even miss it. They’ll grow up thinking “food joy” is a myth—like cursive writing or butter. They’ll hear stories of crusty baguettes and sizzling fat the way Boomers talk about jazz clubs and cigarettes. Romantic, but reckless.

In this optimized hellscape, eating is no longer an art. It’s a biometric negotiation between your body and a neural net that no longer trusts you to feed yourself responsibly.

The future of food is functional. Beige. Pre-chewed by code. And flavor? That’s just a bug in the system.