Optimization without integration produces a lopsided human being, and the AI age intensifies this distortion by overrewarding what can be optimized, automated, and displayed. Systems built on speed, output, and measurable performance train us to chase visible gains while starving the slower capacities that make those gains usable in real life. The result is a person who can execute flawlessly in one narrow lane yet falters the moment the situation becomes human—ambiguous, emotional, unscripted. The body may be sculpted while the self remains adolescent; the résumé gleams while judgment dulls; productivity accelerates while meaning evaporates. AI tools amplify this imbalance by making optimization cheap and frictionless, encouraging rapid improvement without requiring maturation, reflection, or integration. What emerges is not an unfinished person so much as an unevenly finished one—overdeveloped in what can be measured and underdeveloped in what must be lived. The tragedy is not incompetence but imbalance: strength without wisdom, speed without direction, polish without presence. In an age obsessed with optimization, what looks like progress is often a subtler form of arrested development.

To interrogate your own tendency to pursue optimization without integration, write a 500-word personal narrative analyzing a period in your life when you aggressively optimized one part of yourself (your body, productivity, grades, skills, image, or output) while neglecting to integrate that growth into a fuller, more functional self.

Begin by narrating the specific context in which optimization took hold. Describe the routines, metrics, sacrifices, and rewards that drove your improvement. Use concrete, sensory detail to show what was gained: strength, speed, recognition, efficiency, status, or validation. Make the optimization legible through action rather than abstraction.

Then pivot. Identify the moment—or series of moments—when the imbalance became visible. What failed to develop alongside your optimized trait? Social competence? Emotional maturity? Judgment? Confidence? Meaning? Show how this lack of integration surfaced in a lived encounter: a conversation you couldn’t sustain, an opportunity you mishandled, a relationship you sabotaged, or a realization that exposed the limits of your progress.

By the end of the essay, articulate what optimization without integration cost you. Do not reduce this to a moral lesson or self-help platitude. Instead, reflect on what this experience taught you about human development itself: why improving a single dimension of the self can create distortion rather than wholeness, and how true growth requires coordination between capacity, character, and context.

Your goal is not confession or nostalgia but clarity. Show how a life can look impressive on the surface while remaining structurally incomplete—and what it takes to move from optimization toward integration.

Avoid clichés about “balance” or “being well-rounded.” This essay should demonstrate insight through specificity, humor, and honest self-assessment. Let the reader see the mismatch before you explain it.

As a model for the assignment, consider the following self-interrogation—a case study in optimization gone feral and integration nowhere to be found.

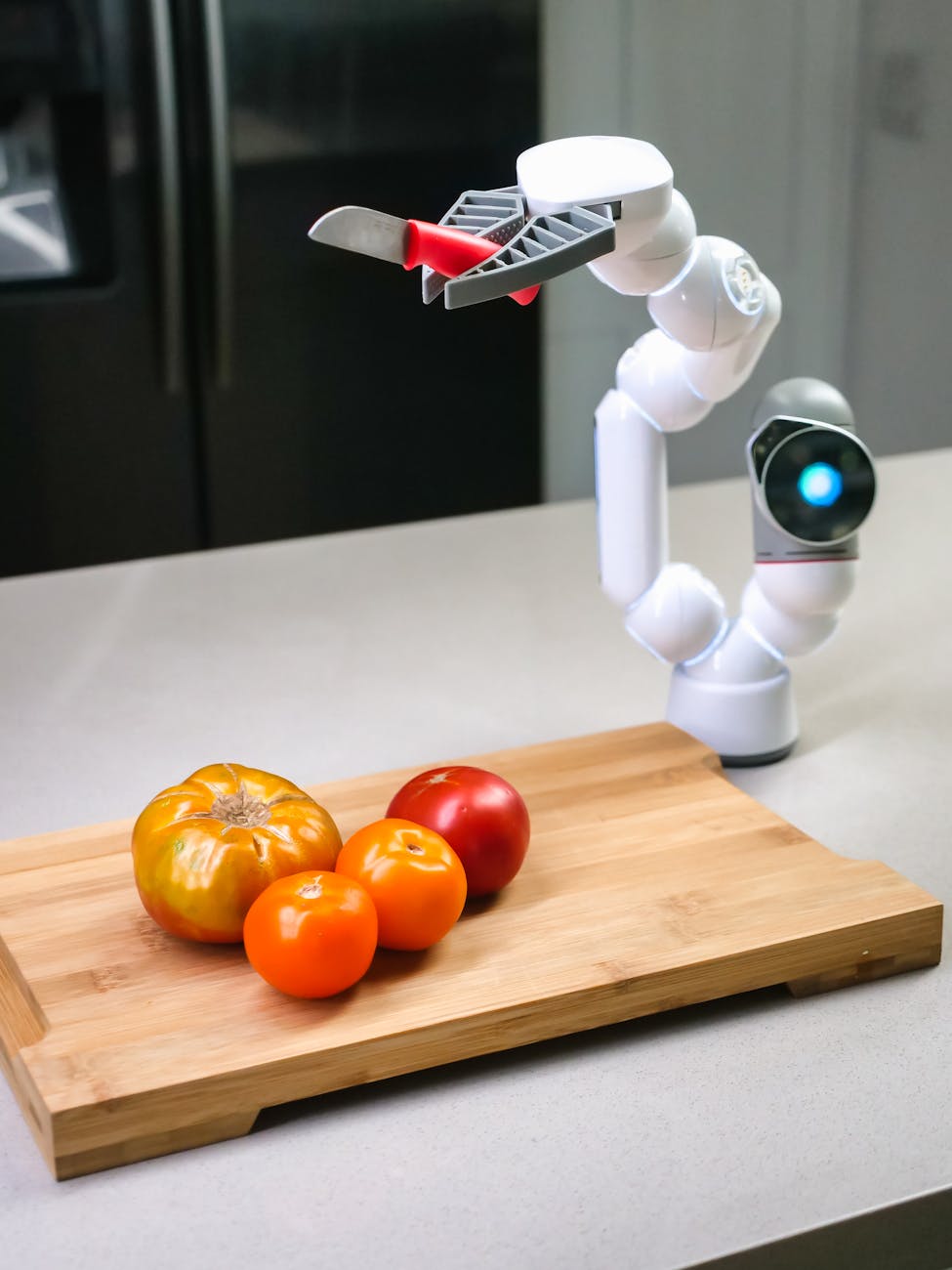

At nineteen, I fell into a job at UPS, where they specialized in turning young men into over-caffeinated parcel gladiators. Picture a cardboard coliseum where bubble wrap was treated like a minor deity and the only sacrament was speed. My assignment was simple and brutal: load 1,200 boxes an hour into trailer walls so tight and elegant they could’ve qualified for Olympic Tetris. Five nights a week, from eleven p.m. to three a.m., I lived under fluorescent lights, sprinting on concrete, powered by caffeine, testosterone, and a belief that exhaustion was a personality trait. Without meaning to, I dropped ten pounds and watched my body harden into something out of a comic book—biceps with delusions of automotive lifting.

This mattered because my early bodybuilding career had been a public embarrassment. At sixteen, I competed in the Mr. Teenage Golden State in Sacramento, smooth as a marble countertop and just as defined. A year later, at the Mr. Teenage California in San Jose, I repeated the humiliation, proving that consistency was my only strength. I refused to let my legacy be “promising kid, zero cuts.” Now, thanks to UPS cardio masquerading as labor, I watched striations appear like divine handwriting. Redemption no longer seemed possible; it felt scheduled.

So I did what any responsible nineteen-year-old bodybuilder would do: I declared war on carbohydrates. I starved myself with religious fervor and trained like a man auditioning for sainthood. By the time the 1981 Mr. Teenage San Francisco rolled around at Mission High School, I had achieved what I believed was human perfection—180 pounds of bronzed, veined, magazine-ready beefcake. The downside was logistical. My clothes no longer fit. They hung off me like a visual apology. This triggered an emergency trip to a Pleasanton mall, where I entered a fitting room that felt like a shrine to Joey Scarbury’s “Theme from The Greatest American Hero,” the soundtrack of peak Reagan-era delusion.

While changing behind a curtain so thin it offered plausible deniability rather than privacy, I overheard two young women working the store arguing—audibly—about which one should ask me out. Their voices escalated. Stakes rose. I imagined them staging a full WWE brawl among the racks: flying elbows, folding chairs, all for the right to split a breadstick with me at Sbarro. This, I thought, was the payoff. This was what discipline looked like.

And then—nothing. I froze. I adopted an aloof, icy expression so effective it could’ve extinguished a bonfire. The women scattered, muttering about my arrogance, while I stood there in my Calvin Kleins, immobilized by the very attention I had trained for. I had optimized everything except the part of me required to be human.

For a brief, shimmering window, I possessed the body of a Greek god and the social competence of a malfunctioning Atari joystick. I looked like James Bond and interacted like a background extra waiting for direction. Beneath the Herculean exterior was a hollow shell—a construction site abandoned mid-project, rusted scaffolding still up, a plywood sign nailed crookedly to the entrance: SORRY, WE’RE CLOSED.